Research

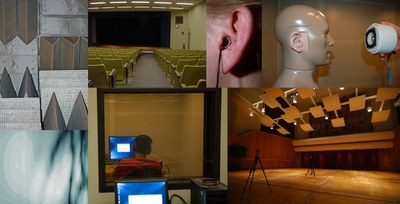

ZAPlab studies human auditory perception and performance, with a focus on sound localization and spatial hearing in complex listening situations. Primary research areas include:

Auditory distance perception: The perception of sound source distance provides spatial information that in certain circumstances can be critical for survival, such as determining the proximity of a predator or prey, particularly in the dark or when sound sources are outside the field of view. It is surprising, therefore, that auditory distance perception has been understudied relative to directional sound localization. This gap in knowledge has motivated our work in this area. Central findings from our research include demonstrations that sound source loudness and perceived source distance are independent, that reflected/reverberant sound is important for distance perception, and that although humans can flexibly combine information from multiple acoustic distance cues, systematic biases in perceived distance exist. Newer work has shown that monaural distance coding in humans depends critically on both reverberation and the amplitude modulation characteristics of the sound source, and that these two stimulus attributes work in concert to code distance within neurons of the rabbit inferior colliculus.

Zahorik, P. & Wightman, F. L. (2001). Loudness constancy with varying sound source distance. Nature Neuroscience, 4(1), 78-83.

Zahorik, P. (2002). Direct-to-reverberant energy ratio sensitivity. Journal of the Acoustical Society of America, 112(5), 2110-2117.

Zahorik, P. (2002). Assessing auditory distance perception using virtual acoustics. Journal of the Acoustical Society of America, 111(4), 1832-1846.

Kim, D. O., Zahorik, P., Bishop, B., Carney, L., & Kuwada, S. (2015). Auditory distance coding: rabbit midbrain neurons and human perception. Journal of Neuroscience, 35(13), 5360-5372. PMCID: PMC4381006

Speech understanding in acoustically reflective environments: Our work on auditory distance perception makes clear that reverberation plays a very important role in the perception of sound source distance. On the other hand, reverberation has long been known to degrade speech understanding. The degradation is most evident at high levels of reverberation and for those with hearing impairment. For individuals with normal hearing in environments with moderate levels of reverberation, however, speech understanding is surprisingly unaffected. To understand this apparent paradox, we examined the role of listening exposure to reverberant sound fields. We have found that for normally hearing listeners in moderately reverberant environments, speech understanding improves significantly with brief prior listening exposure, suggesting a form of perceptual adaptation to the reverberant sound field. The adaptation seems to be driven by the interaction of the speech amplitude envelope with the reverberant sound field, but the details of this process are, as yet, unknown. Understanding this process and its potential interaction with binaural processing will provide important guidance for the design of hearing aids and assistive devices that improve speech understanding in reverberation while maintaining the known sound quality benefits of reverberation.

Brandewie, E. & Zahorik, P. (2010). Prior listening in rooms improves speech intelligibility. Journal of the Acoustical Society of America, 128(1), 291-299. PMCID: PMC2921430

Srinivasan, N. K. & Zahorik, P. (2013). Prior listening exposure to a reverberant room improves open-set intelligibility of high-variability sentences. Journal of the Acoustical Society of America, 133(1), EL33-EL39. PMCID: PMC3555507

Srinivasan, N. K., & Zahorik, P. (2014). Enhancement of speech intelligibility in reverberant rooms: Role of amplitude envelope and temporal fine structure. Journal of the Acoustical Society of America, 135(6), EL239-EL245. PMCID: PMC4032445

Zahorik, P. & Brandewie, E. (2016). Speech intelligibility in rooms: Effect of prior listening exposure interacts with room acoustics. Journal of the Acoustical Society of America, 140(1), 74-86. PMCID: pending

Multisensory integration in auditory space perception: Our previous work in auditory distance perception indicates that perceived distance in humans is systematically biased: far sound source distances are underestimated, and near source distances are overestimated. This is in stark contrast to visual depth perception, where distance estimates are highly accurate. We have shown that the addition of visual depth information can dramatically improve auditory distance accuracy and also lower the variability in auditory distance judgments. Similarly, we have shown in the domain of directional sound localization that multisensory input over time can facilitate improved accuracy by reducing front/back confusion errors. These results provide important groundwork for developing methods of improving orientation and navigation abilities in humans when information from a sensory modality is missing or degraded, such as for blind navigation using sound.

Zahorik, P. (2001). Estimating sound source distance with and without vision. Optometry and Vision Science, 78(5), 270-275.

Zahorik, P., Bangayan, P., Sundareswaran, V., Tam, C., & Wang, K. (2006). Perceptual recalibration in human sound localization: Learning to resolve front-back confusions. Journal of the Acoustical Society of America, 120(1), 343-359.

Wolbers, T., Zahorik, P., & Giudice, N. A. (2011). Decoding the direction of auditory motion from BOLD responses in blind humans. NeuroImage, 56(2), 681-687.

Anderson, P. W., & Zahorik, P. (2014). Auditory/visual distance estimation: accuracy and variability. Frontiers in Auditory Cognitive Neuroscience, 5, 1097. PMCID: PMC4188027

Virtual auditory space technology and validation: Virtual auditory space (VAS) technology enables accurate and precisely controllable simulation of listening experiences that mimic those resulting from real sound sources in real sound fields. This technology has been transformational for the study of spatial hearing. Our research has made extensive use of VAS technology and has been involved in various efforts to validate, improve, and apply the technology to new areas of study. For example, we were the first to demonstrate that highly controlled VAS methods can result in virtual sounds that are indistinguishable from real sound sources. This is the strongest form of validation for the VAS method. We were also the first to apply VAS technology to the study of auditory distance perception. More recently, we have validated the use of VAS technology for simulating the relevant physical and perceptual attributes of small room acoustics. Application of VAS technology will continue to have a major impact on the field of hearing science.

Zahorik, P., Wightman, F. L., & Kistler, D. J. (1995). On the discriminability of virtual and real sound sources. Applications of Signal Processing to Audio and Acoustics, IEEE ASSP Workshop on Applications of Signal Processing to Audio and Acoustics. New York: IEEE Press. 76-79. DOI: 10.1109/ASPAA.1995.482951

Zahorik, P. (2000). Limitations in using Golay codes for head-related transfer function measurement. Journal of the Acoustical Society of America, 107(3), 1793-1796.

Zahorik, P. (2002). Assessing auditory distance perception using virtual acoustics. Journal of the Acoustical Society of America, 111(4), 1832-1846.

Zahorik, P. (2009). Perceptually relevant parameters for virtual listening simulation of small room acoustics. Journal of the Acoustical Society of America, 126(2), 776-791. PMCID: PMC2730711

Hearing impairment: We have investigated a variety of topics, including potential correlates of “hidden” hearing loss, binaural phase sensitivity, and the effects of binaurally linked dynamic range compression in hearing aids. These studies represent a collaboration among the Heuser Hearing Institute, Sonova, and the University of Louisville. We have also collaborated with Dr. Paul Reinhart and Dr. Pam Souza on studies of the effects of reverberation on hearing aid compression and processing strategies.

Shehorn, J., Strelcyk, O., & Zahorik, P. (2020). Associations between speech recognition at high levels, the middle ear muscle reflex and noise exposure in individuals with normal audiograms. Hearing Research, 107982. https://doi.org/10.1016/j.heares.2020.107982.

Strelcyk, O., Zahorik, P., Shehorn, J., Patro, C., & Derleth, R. P. (2019). Sensitivity to interaural phase in older hearing-impaired listeners correlates with nonauditory trail making scores and with a spatial auditory task of unrelated peripheral origin. Trends in Hearing, 23. https://doi.org/10.1177/2331216519864499.

Dillard, B., Shehorn, J., Strelcyk, O., & Zahorik, P. (2019). SSQ confirms self-reported hearing difficulties for listeners with normal audiograms. American Academy of Audiology Annual Conference, Columbus, OH.

Lorenz, S., Shehorn, J., Strelcyk, O., & Zahorik, P. (2019). Measures of cognitive ability in listeners with self-reported hearing difficulties but normal audiograms. American Academy of Audiology Annual Conference, Columbus, OH.

Vajda, R., Nunn, H., Shehorn, J., Strelcyk, O., & Zahorik, P. (2019). Spatial hearing deficits in normal-hearing listeners that self-report hearing difficulties. American Academy of Audiology Annual Conference, Columbus, OH.

Reinhart, P., Zahorik, P., & Souza, P. E. (2017). Effects of reverberation, background talker number, and compression release time on signal-to-noise ratio. Journal of the Acoustical Society of America, 142(1), EL130-EL135. PMCID: PMC5724723

Reinhart, P., Zahorik, P., & Souza, P. E. (2019). Effects of reverberation on the relationship between compression speed and working memory for speech-in-noise perception. Ear and Hearing. doi: 10.1097/AUD.0000000000000696.

Reinhart, P., Zahorik, P., & Souza, P. E. (2020). Interactions between digital noise reduction and reverberation: Acoustic and behavioral effects. Journal of the American Academy of Audiology, 31(1), 17-29.